Artificial Intelligence (AI) is transforming industries across the globe, from healthcare and finance to education and manufacturing. However, as AI systems become increasingly complex, understanding how they make decisions has become a critical concern. This is where Explainable AI (XAI) comes into play. In this guide, we will explore what XAI is, why it matters, its benefits, its real-world applications, the challenges it faces, and where it is headed.

What Is Explainable AI (XAI)?

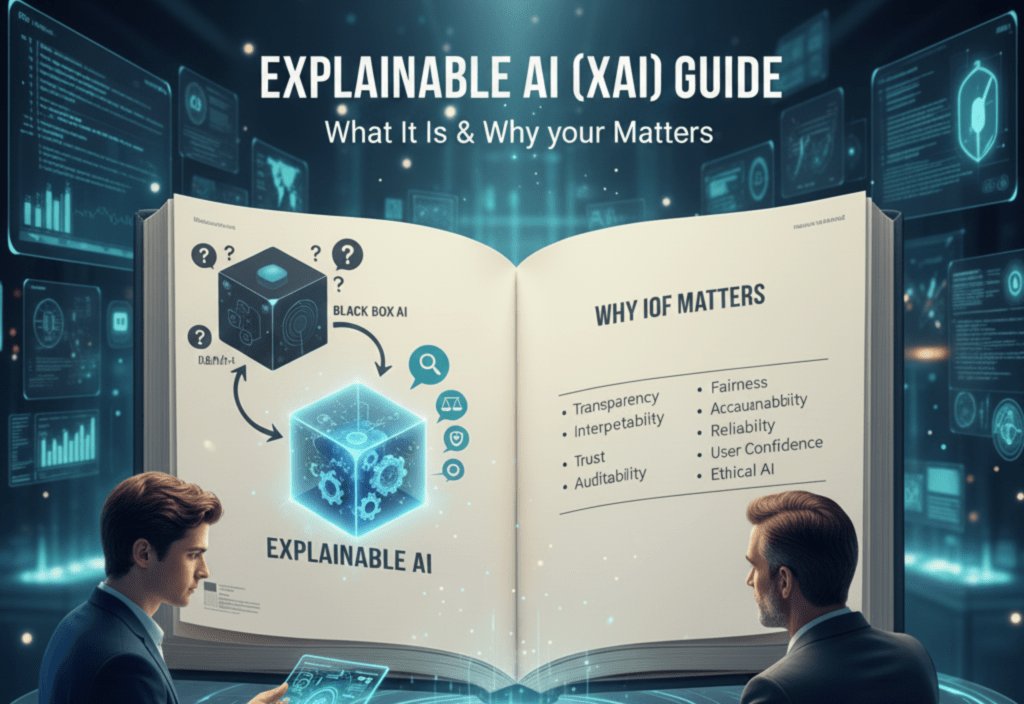

Explainable AI (XAI) refers to AI systems designed to provide clear, understandable explanations of their decisions, predictions, or recommendations. Traditional AI models, especially deep learning systems, are often considered “black boxes” because their internal workings are difficult to interpret. XAI aims to make these processes transparent so that humans can trust, verify, and effectively manage AI decisions.

In simple terms, XAI allows people to understand why an AI model produced a specific outcome, which is crucial for critical applications like medical diagnoses, financial predictions, and legal decisions.
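To make "why a model produced a specific outcome" concrete, here is a minimal sketch using a linear scoring model, one of the simplest cases where an explanation falls directly out of the model. The feature names, weights, and applicant values are hypothetical and chosen only for illustration; they do not come from any real system.

```python
# Hypothetical linear scoring model: names and weights are illustrative only.
WEIGHTS = {"income": 0.5, "debt_ratio": -2.0, "years_employed": 0.25}
BIAS = -1.0


def explain(features):
    """Return the score and each feature's additive contribution to it."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    return score, contributions


def top_reasons(contributions, k=2):
    """Rank features by the absolute size of their contribution."""
    ranked = sorted(contributions, key=lambda n: abs(contributions[n]), reverse=True)
    return ranked[:k]


# Hypothetical applicant with already-scaled feature values.
applicant = {"income": 4.0, "debt_ratio": 0.5, "years_employed": 2.0}
score, contributions = explain(applicant)

print(score)                       # overall model output
print(contributions)               # how much each feature pushed the score
print(top_reasons(contributions))  # the features that mattered most
```

Because the score is a plain sum, each contribution can be read as "this feature moved the result by this much". Deep models do not decompose this neatly, which is why they usually need dedicated explanation techniques.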

Why Explainable AI Matters

1. Building Trust in AI Systems

Trust is a cornerstone of adopting AI technologies. When users and stakeholders understand how an AI system reaches a decision, they are more likely to rely on it. For example, doctors using AI for medical diagnoses need to know the reasoning behind predictions before making treatment decisions.

2. Ensuring Compliance and Accountability

Many industries are subject to strict regulations regarding transparency and fairness. XAI helps organizations comply with these standards by providing clear audit trails of AI decision-making processes. This is particularly important in sectors like finance, healthcare, and government.

3. Identifying Bias and Errors

AI systems can inadvertently pick up biases from the data they are trained on. Explainable AI makes it easier to detect and correct biased or inaccurate predictions, which supports fairer outcomes and reduces ethical risks.
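One simple way to look for this kind of bias is to compare how often a model gives a positive prediction to each group. The sketch below computes that gap on a small made-up dataset. The predictions, the group labels "A" and "B", and the function names are all hypothetical, and this is only one of several possible fairness checks.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group positive rates."""
    return max(rates.values()) - min(rates.values())


# Made-up model outputs for eight people in two hypothetical groups.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = positive_rate_by_group(predictions, groups)
gap = parity_gap(rates)
print(rates, gap)
```

A large gap does not prove the model is unfair on its own, but it flags where a closer look at the data and the model's reasoning is needed.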

4. Improving AI Model Performance

By understanding how a model reaches its conclusions, developers can identify weaknesses, optimize algorithms, and improve overall performance. XAI not only makes AI more interpretable but also more effective and reliable.
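A common model-agnostic way to see what a model relies on is permutation importance: shuffle one feature at a time and measure how much accuracy drops. The sketch below is a minimal standard-library version. The `model` function is a hypothetical stand-in for a trained model, and the data is made up for illustration.

```python
import random


def model(row):
    # Hypothetical stand-in for a trained model: it only looks at feature 0.
    return 1 if row[0] > 0.5 else 0


def accuracy(rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(rows, labels, n_features, repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild the rows with only column j replaced by shuffled values.
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances


# Made-up data: feature 0 drives the label, feature 1 is noise.
rows = [[0.1, 5.0], [0.2, 1.0], [0.3, 4.0], [0.4, 2.0],
        [0.6, 3.0], [0.7, 5.0], [0.8, 1.0], [0.9, 2.0]]
labels = [model(r) for r in rows]

importances = permutation_importance(rows, labels, n_features=2)
print(importances)
```

Here the feature the model ignores gets an importance of zero, while the feature it depends on shows a clear accuracy drop. On a real model, the same pattern can reveal features that carry too much or too little weight.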

Key Benefits of Explainable AI

- Transparency: Clear understanding of AI decision-making processes.
- Accountability: Organizations can justify AI-based decisions.
- Fairness: Detects and corrects biases in AI models.
- Efficiency: Helps developers fine-tune models for better results.
- User Confidence: Encourages adoption by increasing trust in AI solutions.

Overall, XAI bridges the gap between AI's complexity and human comprehension, enabling responsible and ethical AI deployment.

Real-World Applications of Explainable AI

1. Healthcare

In healthcare, XAI is critical for AI-assisted diagnostics. Doctors need transparent explanations of AI recommendations to make informed decisions and gain patient trust.

2. Finance

Banks and financial institutions use XAI for credit scoring, fraud detection, and investment predictions. Explainable decisions help meet regulatory requirements and prevent legal issues.

3. Autonomous Vehicles

Self-driving cars rely on AI for navigation and decision-making. XAI helps developers understand why a vehicle acted as it did in complex traffic scenarios, which supports safety and reliability.

4. Legal and Compliance

XAI assists legal professionals by providing transparent insights into AI predictions in areas like risk assessment and case analysis, ensuring ethical and fair use.

5. Business and Marketing

Companies use XAI to analyze customer behavior, personalize recommendations, and optimize marketing strategies. Transparent AI models help justify business decisions to stakeholders.

Challenges in Explainable AI

Despite its benefits, XAI faces several challenges:

- Complexity of AI Models: Deep learning models are inherently difficult to interpret.
- Trade-Off Between Accuracy and Explainability: Highly complex models may provide better predictions but are harder to explain.
- Standardization Issues: There is no universal standard for explainability, making implementation inconsistent.

However, ongoing research and development are continuously addressing these challenges, making XAI more practical and accessible.

The Future of Explainable AI

The future of XAI lies in creating AI systems that are both highly accurate and fully interpretable. As AI adoption increases, industries will demand transparency, ethical accountability, and user-friendly explanations. Emerging tools and frameworks are already enabling developers to build more interpretable models without compromising performance.

In addition, integrating XAI with other AI technologies will allow organizations to deploy AI responsibly, ensuring both innovation and trust.

Conclusion

Explainable AI (XAI) is no longer optional; it is essential for the responsible and ethical deployment of AI systems. By providing transparency, accountability, and trust, XAI empowers industries to make smarter, fairer, and safer decisions. From healthcare and finance to autonomous vehicles and business analytics, XAI ensures that AI technologies are understandable, reliable, and beneficial for all stakeholders.

Ultimately, the future of AI depends on our ability to understand it, and that is exactly what Explainable AI makes possible.
